The author of this topic had the same error.

However, there is no reason why the screens should not be switched programmatically with Companion. And there was no problem / error even after switching the screens several times.

In short: there is no reason to switch screens manually with Companion.

I don't know if I understood correctly.

There may be no reason, but there shouldn't be an error. There is no error in the Android version, and the iOS version should work the same way.

Sometimes it is necessary to switch screens manually in order to test the user interface on other screens.

There were multiple race conditions* involved in this bug.

The first involves the screen transition in the companion app and the transition in the editor. Unlike in the Android version, we actually leverage the built-in functionality of iOS to represent the view stack. This matters because Android (at least up until Android 13) always provided a back button, either as a soft key or, on some phones, as a physical button. iOS has no such button, so without making use of the UINavigationController the user would have no natural way of transitioning back in the screen hierarchy like they do on Android.

The second part of this is that iOS has a sequence of events that occur during the transition to the new screen, and we chose to make the new screen officially active in the viewDidAppear: callback. For the most part this has been fine. Independently, a message is returned to App Inventor to let it know that it should switch to the newly opened screen, which it does, and then it sends the code to actually draw the screen back to the companion app.

In the older legacy mode, there was enough of a delay here that the UI transition on the device had completed before the code to draw the screen was received, so all was good. With the transition to WebRTC, however, the code to draw the screen can sometimes arrive before the new screen becomes active. In that case the contents are drawn onto the previous screen, and the new screen (which is blank by default) appears on top of it. This is one potential scenario leading to the blank screen phenomenon.
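To make the ordering problem concrete, here is a minimal toy model in Python. It is not the companion's actual code: the `Companion` class, its method names, and the `Label1` component are all made up for illustration, and `view_did_appear` only stands in for the real viewDidAppear: callback. The only thing it tries to show is that the same two steps give different results depending on which one happens first.

```python
from dataclasses import dataclass, field


@dataclass
class Screen:
    name: str
    components: list = field(default_factory=list)  # a new screen is blank


class Companion:
    """Toy stand-in for the companion app (hypothetical, for illustration)."""

    def __init__(self):
        self.screens = {"Screen1": Screen("Screen1")}
        self.active = self.screens["Screen1"]
        self.pending = None

    def open_screen(self, name):
        # The transition starts: the new screen exists but is not active yet.
        self.pending = self.screens[name] = Screen(name)

    def view_did_appear(self):
        # Stands in for viewDidAppear:, where the new screen becomes active.
        self.active, self.pending = self.pending, None

    def receive_draw_code(self, components):
        # Draw code is applied to whichever screen is active at this moment.
        self.active.components.extend(components)


def run(order):
    companion = Companion()
    companion.open_screen("Screen2")
    for step in order:
        if step == "appear":
            companion.view_did_appear()
        else:
            companion.receive_draw_code(["Label1"])
    return {name: s.components for name, s in companion.screens.items()}


# Slow channel: the transition finishes before the draw code arrives.
slow = run(["appear", "draw"])
# Fast channel: the draw code arrives before the screen is active.
fast = run(["draw", "appear"])

print(slow)  # {'Screen1': [], 'Screen2': ['Label1']}
print(fast)  # {'Screen1': ['Label1'], 'Screen2': []}
```

In the `fast` ordering the label lands on Screen1 and Screen2 stays empty, which is the blank screen described above.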

The second race condition is conceptually tied to this but slightly different, and is the one we're seeing in this thread. When an event is raised in App Inventor to run the user's blocks, a check called canDispatchEvent is performed. If the event can be dispatched, then the Scheme code for that event block is run. However, in the iOS version we made a premature optimization: whenever an event is run, we also make the Screen it belongs to active, which turns out to be wrong when dealing with events during screen transitions.

In particular, when using this app in its normal flow, it's highly likely that one of the two textboxes has focus when the button to compute the BMI is clicked. Clicking the button starts the transition to Screen2 (as described above), but then the textbox fires its LostFocus event, which swaps out the newly created Screen2 object for Screen1 as the active screen. When the code to draw the contents of Screen2 then arrives from App Inventor, the contents end up on Screen1 and Screen2 appears blank. If you click the button without having focused a textbox, everything works as expected.

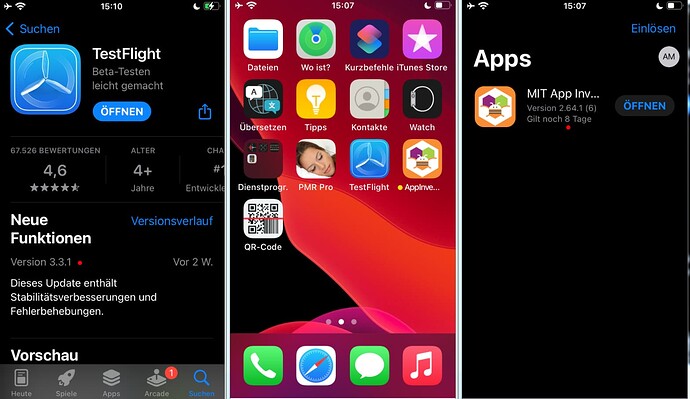
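The second race condition can be sketched the same way. Again this is only a toy model in Python, not the real implementation: `dispatch_event` stands in for the canDispatchEvent check plus running the handler, and the names `TextBox1` and `ResultLabel` are invented. The `activate_on_dispatch=False` variant is just one plausible way to avoid the problem and is not necessarily what the actual fix does.

```python
class Companion:
    """Toy stand-in for the companion app (hypothetical, for illustration)."""

    def __init__(self, activate_on_dispatch):
        self.drawn = {"Screen1": [], "Screen2": []}
        self.active = "Screen1"
        self.activate_on_dispatch = activate_on_dispatch

    def open_screen(self, name):
        # For simplicity the new screen becomes active immediately here.
        self.active = name

    def dispatch_event(self, screen, event):
        # Stands in for the canDispatchEvent check and running the handler.
        if self.activate_on_dispatch:
            # The problematic side effect: running an event for a screen
            # also makes that screen the active one.
            self.active = screen
        # The user's blocks for `event` would run here.

    def receive_draw_code(self, components):
        self.drawn[self.active].extend(components)


def bmi_flow(activate_on_dispatch, textbox_focused):
    companion = Companion(activate_on_dispatch)
    companion.open_screen("Screen2")  # the button click opens Screen2
    if textbox_focused:
        # The textbox on Screen1 loses focus during the transition.
        companion.dispatch_event("Screen1", "TextBox1.LostFocus")
    companion.receive_draw_code(["ResultLabel"])  # draw code for Screen2
    return companion.drawn


print(bmi_flow(True, textbox_focused=True))
# {'Screen1': ['ResultLabel'], 'Screen2': []}
print(bmi_flow(True, textbox_focused=False))
# {'Screen1': [], 'Screen2': ['ResultLabel']}
print(bmi_flow(False, textbox_focused=True))
# {'Screen1': [], 'Screen2': ['ResultLabel']}
```

With the side effect in place, the result depends on whether a textbox had focus, which matches the behavior reported in this thread. Without it, the late LostFocus event no longer changes where the draw code lands.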

Both of these race conditions should be fixed as of 2.64.2 build 7 on TestFlight.

* A race condition here is a term of art to describe two or more processes running in parallel where we expect the same outcome regardless of which process finishes first, but the actual outcome ends up depending on the order the processes finish (such as in a road race).

Why shouldn't this be possible via the app itself?

I also saw this error when testing with the version in the App Store, but I have been unable to replicate it with the version in TestFlight. Which version were you running when you observed that error?

I can understand why this would be useful. In particular, if there is a lengthy sequence of steps to get deep enough into the app to perform testing it can be helpful to have ways to shortcut all the intermediate state. Old video games for example used to have cheat codes or other ways to jump to different levels for testing.

For example, when I'm fixing a bug in App Inventor, each time I have to rebuild the companion, load it onto my device, and then scan the QR code to connect to the project that triggers the bug. Sometimes it would be nice to just compile and then run the test project, but we don't have an apparatus for that yet (and then of course you'd still have to simulate whatever inputs are required to trigger the bug, if any).

Sorry, but I don't understand where the difference should be, whether I switch to a screen manually or e.g. via a button within the app.

If certain procedures (events) should not be executed on the newly opened screen (for test purposes), I will have to disable them beforehand anyway. To do this, I would first disconnect Companion, make the adjustments on this screen and only then connect to Companion.

Ok. Thanks for checking. We are maintaining multiple groups on TestFlight and it looks like you're in a beta testing group that hasn't received the latest version. I've just pushed the 2.64.2 (9) build out to everyone in your particular group. If you continue to get the error related to an unknown screen please let me know.

Thanks, everything is working fine now.