I thought AI2 only read text files.

Please download and post each of those event block(s)/procedures here ...

P.S. These blocks can be dragged directly into your Blocks Editor workspace.

See Download Block Images for a demo.

How did you get your byte data into an AI2 list?

I'm using SAF: ReadAsByteArray.

Reading the file as binary is not the problem (I'm using the SAF extension). The problem is extracting 1,000 elements from a list with 1,000,000 elements.

The "for each item/number" block simply closes the app, and the SLICE LIST block gives a stack error as soon as you try to extract elements starting from index 60,000.

Yes, and ...?

Oh, it has gone up from 100,000 to a million?

I prepared a simple AIA that reads a file as binary using SAF (maybe it will be useful for you) and then tries to slice the list. The result is a STACK ERROR.

Prova.aia (33.4 KB)

Thank you, Taifun. I was thinking about your SQLite extension (I've already bought it from you and used it successfully in my apps; it's PERFECT!), but loading 2-3 million records into the database every time I read a file seems a little excessive (and would also take a lot of time).

The SLICE LIST block would be perfect. Maybe somebody will be able to fix this problem (I prepared an AIA that reproduces the error).

You can't shard your data to load subsets?

Please build the attached AIA and select any file larger than 800 KB on your smartphone: you will get a STACK ERROR when the "slice list" block tries to extract elements starting at index 700,000.

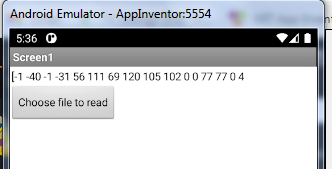

This is my first experience with a SAF ByteArray.

Is it text, or is it an AI2 list?

If it is text, why not use the text segment block?

If it is a list, why the SPLIT AT SPACES?

Have you tried the JavaScript arrayBuffer.slice() method?
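The idea behind `arrayBuffer.slice()` is to cut a byte range directly out of the raw buffer (it returns a copy of just that range) instead of out of a list built from decimal text. As an illustration only, in Python rather than AI2 blocks or JavaScript, the same idea is slicing a `bytes` object through a `memoryview`, so the large buffer itself is never copied; `byte_range` is a hypothetical helper name:

```python
def byte_range(data: bytes, start: int, count: int) -> bytes:
    """Return `count` bytes starting at `start`, cut from the raw buffer."""
    view = memoryview(data)                      # no copy of the whole buffer
    return view[start:start + count].tobytes()   # copies only the requested range


data = bytes(range(256)) * 4000   # about 1 MB of sample bytes
chunk = byte_range(data, 700_000, 1000)
print(len(chunk))                 # 1000
```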

It's text in which each byte from the file is written as a decimal number. Each number is separated from the next by a space. The numbers have different lengths (values go up to 127 and may also have a minus sign in front).

Unfortunately, I don't know how to use JavaScript from AI2. Could you please give me a link to read?
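If, as the minus signs suggest, each number is a signed byte (Java's `byte` type holds -128 to 127), it can be mapped back to the usual unsigned 0 to 255 range with a bit mask. A minimal Python sketch, assuming that two's-complement signed representation; `to_unsigned` is a hypothetical name:

```python
from typing import List


def to_unsigned(signed_values: List[int]) -> List[int]:
    """Map signed byte values (-128..127) to unsigned values (0..255).

    Assumes each value came from a two's-complement signed byte,
    as Java's byte type uses; masking with 0xFF keeps the low 8 bits.
    """
    return [v & 0xFF for v in signed_values]


print(to_unsigned([0, 1, 127, -128, -1]))  # [0, 1, 127, 128, 255]
```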

Search the community for how to run JavaScript.

To "Elaborate"....

These blocks, based on @ABG's suggestion to do the split with the text segment block, worked for me on image files up to about 2.5 MB in size; beyond that, the app crashed with an OOM (out of memory) error.

For larger files, I suggest segmenting the byte array into usable chunks from the beginning.

This was tested with the Companion app on Android 13 (Google Pixel 4a).
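Segmenting "from the beginning" means walking the data in fixed-size pieces and keeping track of where each piece starts, rather than jumping to a high index. A minimal Python sketch of that walk; the generator name `chunks` and the default size of 1000 are assumptions for illustration:

```python
from typing import Iterator, Sequence, Tuple


def chunks(data: Sequence[int], size: int = 1000) -> Iterator[Tuple[int, Sequence[int]]]:
    """Yield (start_index, chunk) pairs, walking the data from the beginning."""
    for start in range(0, len(data), size):
        yield start, data[start:start + size]


sample = list(range(2500))
for start, chunk in chunks(sample, 1000):
    print(start, len(chunk))
# 0 1000
# 1000 1000
# 2000 500
```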

The byte list looks like strange JSON:

Note the leading '[' but spaces instead of commas.
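Because of the leading '[' and the space separators, this text is not valid JSON, but it is easy to parse by hand. A minimal Python sketch, assuming the format looks like `[12 -3 127 0]` (the trailing ']' is stripped only if present); `parse_byte_text` is a hypothetical name:

```python
from typing import List


def parse_byte_text(text: str) -> List[int]:
    """Parse text like "[12 -3 127]" into a list of ints.

    Assumes a leading '[' (and, if present, a trailing ']') with
    values separated by one or more spaces instead of commas.
    """
    body = text.strip()
    if body.startswith("["):
        body = body[1:]
    if body.endswith("]"):
        body = body[:-1]
    return [int(tok) for tok in body.split()]


print(parse_byte_text("[12 -3 127 0]"))  # [12, -3, 127, 0]
```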

The text segment block is likely to split a number in the middle.

True, I hadn't thought of that.
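One way around that problem is to cut at an approximate length and then move each cut forward to the next space, so every piece holds only whole numbers. A minimal Python sketch of that idea; `safe_segments` and `approx_len` are hypothetical names:

```python
from typing import List


def safe_segments(text: str, approx_len: int) -> List[str]:
    """Cut space-separated number text into pieces of roughly approx_len chars.

    Each cut is moved forward to the next space so that no number is
    split in the middle (the problem with a fixed-length text segment).
    """
    pieces = []
    start = 0
    n = len(text)
    while start < n:
        end = min(start + approx_len, n)
        if end < n:
            space = text.find(" ", end)      # next space at or after the cut
            end = n if space == -1 else space
        piece = text[start:end].strip()
        if piece:
            pieces.append(piece)
        start = end + 1                      # skip the space itself
    return pieces


print(safe_segments("12 -3 127 0 45 -100", 5))  # ['12 -3', '127 0', '45 -100']
```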

List operations might be faster if you avoid high item indices, since they are linked lists.
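A toy model of why high indices hurt, assuming (as stated above) that the lists behave like singly linked lists: reaching position i means following i links from the head, so a loop that selects items near index 700,000 pays that walk on every iteration. This is a hypothetical Python model, not AI2's actual list implementation:

```python
from typing import Iterable, Optional, Tuple


class Node:
    """One cell of a singly linked list: a value and a link to the next cell."""

    def __init__(self, value: int, nxt: "Optional[Node]" = None) -> None:
        self.value = value
        self.nxt = nxt


def build(values: Iterable[int]) -> Optional[Node]:
    """Build a linked list holding `values` in order."""
    head: Optional[Node] = None
    for v in reversed(list(values)):
        head = Node(v, head)
    return head


def get(head: Optional[Node], index: int) -> Tuple[int, int]:
    """Return (value, links_followed) for the 0-based position `index`.

    There is no direct jump: every lookup starts at the head and follows
    one link per position, so the cost grows with the index.
    """
    node = head
    steps = 0
    for _ in range(index):
        node = node.nxt
        steps += 1
    return node.value, steps


lst = build(range(100_000))
print(get(lst, 1))       # (1, 1)
print(get(lst, 99_999))  # (99999, 99999)
```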

Copy the list.

Loop until index1, removing item 1 as you go.

Loop until index2-index1, adding onto a separate chunk and deleting as you go.
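The copy-and-remove steps above can be sketched in Python with a `deque`, whose `popleft()` removes the first item cheaply, standing in for "remove list item 1". The function name and the 0-based indices are assumptions of this sketch (AI2 lists are 1-based):

```python
from collections import deque
from typing import List


def extract_by_removing(items: List[int], index1: int, index2: int) -> List[int]:
    """Return items[index1:index2] by only ever touching the front of a copy.

    Mirrors the steps: copy the list, drop the first index1 items,
    then move the next (index2 - index1) items into a separate chunk.
    """
    if not 0 <= index1 <= index2 <= len(items):
        raise ValueError("indices out of range")
    work = deque(items)            # copy, so the original list is untouched
    for _ in range(index1):        # remove "item 1" index1 times
        work.popleft()
    chunk = []
    for _ in range(index2 - index1):
        chunk.append(work.popleft())   # add to the chunk, deleting as we go
    return chunk


data = list(range(1_000_000))
print(extract_by_removing(data, 700_000, 700_005))
# [700000, 700001, 700002, 700003, 700004]
```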

If that's not fast enough, use a Clock Timer and a Progress Notifier, 1000 bytes per chunk.
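A sketch of that timer idea, with hypothetical names: each call to `tick()` stands in for one Clock.Timer event, handles at most 1000 items, and returns a percentage that a progress Notifier could display. The running sum is only placeholder work:

```python
from typing import Sequence


class ChunkedJob:
    """Process a long sequence a fixed number of items per "timer tick".

    In AI2 each tick would be one Clock.Timer event; here tick() is simply
    called in a loop. CHUNK = 1000 follows the suggestion above.
    """

    CHUNK = 1000

    def __init__(self, data: Sequence[int]) -> None:
        self.data = data
        self.pos = 0    # index of the next item to process
        self.total = 0  # placeholder work: running sum of the items

    def done(self) -> bool:
        return self.pos >= len(self.data)

    def tick(self) -> int:
        """Handle one chunk and return progress as a whole percentage."""
        end = min(self.pos + self.CHUNK, len(self.data))
        for i in range(self.pos, end):
            self.total += self.data[i]
        self.pos = end
        if not self.data:
            return 100
        return self.pos * 100 // len(self.data)


job = ChunkedJob(list(range(2500)))
while not job.done():
    print("progress:", job.tick(), "%")  # what a progress Notifier would show
print(job.total)
# progress: 40 %
# progress: 80 %
# progress: 100 %
# 3123750
```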

Brush up on your JavaScript.

Thank you, ABG. By removing items as I went, I was able to double the processing speed for a list with 25,000 elements.

I tested all the variants, and here is the summary:

To process huge strings (byte by byte) or huge lists (element by element) without a STACK ERROR or an application crash, it's necessary to divide the string/list into small pieces using a Clock timer.

The extraction of each piece can be done by:
